What is linguistics, after all? Is it just language? The answer is “no”. Linguistics is the science behind how a language is constructed, not the language itself. You might wonder why I am talking about linguistics and language science. It is because, in my role as a Conversation Designer at AutomataPi Solutions, I design virtual agents that understand human language. Designing anything means constructing it from scratch, but why construct or design the language that a virtual agent understands?

The reason is simple: virtual agents are different from human beings, and they may not understand everything the way humans do. For example, a conversation involves not only language but also body language, eye contact, tone, intonation, and so on, all of which are difficult inputs for machines.

Initially, conversations with computers were restricted to command-based expressions, but as machines have evolved, the human-machine interface has become much more advanced. For this to work, the machine needs to be trained, much as a human learns a language.

Take a look at this sentence – “I never said she stole my money”.

It can have seven different meanings depending on the word that is emphasized.

1. I never said she stole my money (stress on “I”: someone else said it)

2. I NEVER said she stole my money (I would never say that)

3. I never SAID she stole my money (I only implied it)

4. I never said SHE stole my money (I said someone stole, I didn’t point fingers)

5. I never said she STOLE my money (she borrowed it and isn’t giving it back)

6. I never said she stole MY money (she stole money, but I didn’t say it was mine)

7. I never said she stole my MONEY (she might have stolen something else, though)

This shows how complex language is. Stress on a syllable or word is also a spoken phenomenon rather than a written one, so in typed text it usually leaves no trace. How does a bot understand this?
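To see why typed input is a problem, here is a minimal Python sketch. It assumes, as in the list above, that stress is marked by writing the stressed word in capitals, and that the bot lower-cases its input (a common, though not universal, preprocessing step). The helper names `stressed_variants` and `normalize` are hypothetical.

```python
# Hypothetical illustration: mark spoken stress by upper-casing one word,
# then apply the kind of case normalization a simple text front end performs.
SENTENCE = "I never said she stole my money"

def stressed_variants(sentence: str) -> list[str]:
    """Return one variant per word, with that word in capitals to mark stress."""
    words = sentence.split()
    variants = []
    for i in range(len(words)):
        marked = words.copy()
        marked[i] = marked[i].upper()
        variants.append(" ".join(marked))
    return variants

def normalize(text: str) -> str:
    """Lower-case the text and collapse whitespace."""
    return " ".join(text.lower().split())

variants = stressed_variants(SENTENCE)
for v in variants:
    print(v)

# Seven spoken readings collapse into a single written input.
distinct_inputs = {normalize(v) for v in variants}
print(len(variants), "readings ->", len(distinct_inputs), "distinct text input(s)")
```

Once the capitals are normalized away, all seven readings reach the bot as the same string, so nothing in the text itself tells the bot which meaning was intended.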

Let’s take a look at another example. A scripted bot may look somewhat like this:

Bot language (a product-specific bot only)

Bot – Hello, how can I help you?

User – I want to know about your products

Bot – Ok, these are the products that we offer….

User – Ok, tell me about the weather outside

Bot – I am sorry, I can’t help you with that.
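A scripted bot like this can be sketched in a few lines of Python. This is a minimal illustration, not how any particular platform works: the keyword rules, the `RULES` list, and the `respond` function are hypothetical. Each rule maps trigger keywords to a canned reply, and anything outside the script falls through to a fixed apology.

```python
# A minimal scripted bot: hypothetical keyword rules mapped to canned replies.
FALLBACK = "I am sorry, I can't help you with that."

# Each rule pairs a set of trigger keywords with a fixed response (illustrative only).
RULES = [
    ({"hello", "hi"}, "Hello, how can I help you?"),
    ({"product", "products"}, "Ok, these are the products that we offer..."),
]

def respond(utterance: str) -> str:
    """Return the reply of the first rule whose keywords appear in the utterance."""
    # Lower-case each word and strip trailing punctuation before matching.
    tokens = {t.strip(".,!?").lower() for t in utterance.split()}
    for keywords, reply in RULES:
        if tokens & keywords:
            return reply
    # Anything outside the script falls through to the fallback message.
    return FALLBACK

print(respond("I want to know about your products"))
print(respond("Ok, tell me about the weather outside"))
```

The weather question matches no rule, so the bot can only apologize. A human, by contrast, simply answers.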

Human language

Hello, how can I help you?

I want to know about your products

Ok, these are the products that we offer….

Ok, tell me about the weather outside

Oh, it is extremely hot today

Given all this complexity, how can one design open-ended, non-scripted bots?

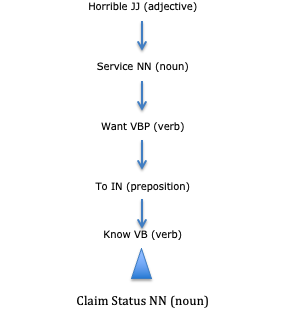

Take a look at this sentence the way linguists would…

A machine that has not been trained to understand natural language would struggle with slang, dialects, and other nuances of language.

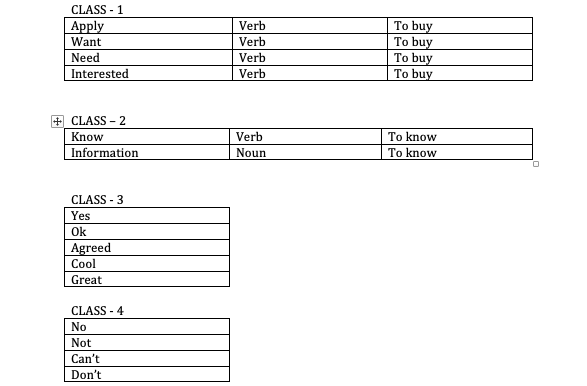

I would have to feed this same breakdown into the machine, which is quite close to feeding in lists of verbs, adjectives, and intensifiers, along with affirmations and negations.
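As a rough illustration, the sketch below stores word-class lists in a Python dictionary and tags each token of an utterance with its class. The class names, the word lists, and the `tag` function are hypothetical. They were chosen only to mirror the categories named above, and a production bot would need far larger lists or a trained part-of-speech tagger.

```python
# Hypothetical word-class lists; a real bot would need much larger, curated lists.
LEXICON = {
    "VB": {"want", "need", "like", "get"},
    "JJ": {"hot", "cheap", "quick"},
    "INTENSIFIER": {"very", "extremely", "really"},
    "AFFIRMATION": {"yes", "sure", "ok"},
    "NEGATION": {"no", "not", "never", "don't"},
}

def tag(utterance: str) -> list[tuple[str, str]]:
    """Pair each token with the first word class that contains it, else 'OTHER'."""
    tags = []
    for raw in utterance.lower().split():
        token = raw.strip(".,!?")
        label = next((cls for cls, words in LEXICON.items() if token in words), "OTHER")
        tags.append((token, label))
    return tags

print(tag("Oh, it is extremely hot today"))
```

Here "extremely" is tagged as an intensifier and "hot" as an adjective, while words outside the lists are tagged `OTHER`.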

Each of these part-of-speech (POS) entities should then be divided by domain, so that the machine understands the context in which they are used.

Bank –

1. Financial institution

2. Bank of a river

Fall –

1. Losing one’s balance

2. A season (autumn)

Hence, it is important to group synonyms by word class and by domain, so that each word resolves to the meaning that fits the context.
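Here is a minimal sketch of this grouping. It assumes a hypothetical lexicon keyed first by domain and then by (word class, word). Within a domain, synonyms such as “fall” and “autumn” share one meaning, while “bank” resolves differently in the hypothetical `finance` and `nature` domains. The domain names and the `sense` function are illustrative assumptions, not part of any particular bot framework.

```python
# Hypothetical lexicon: domain -> (word class, surface word) -> meaning.
# Synonyms in the same domain and word class map to the same meaning.
LEXICON = {
    "finance": {("NN", "bank"): "financial institution",
                ("NN", "lender"): "financial institution"},
    "nature": {("NN", "bank"): "bank of a river",
               ("NN", "riverside"): "bank of a river"},
    "healthcare": {("VB", "fall"): "losing one's balance",
                   ("VB", "slip"): "losing one's balance"},
    "weather": {("NN", "fall"): "season",
                ("NN", "autumn"): "season"},
}

def sense(word: str, word_class: str, domain: str):
    """Return the meaning of a word in a domain, or None if the lexicon lacks it."""
    return LEXICON.get(domain, {}).get((word_class, word.lower()))

print(sense("bank", "NN", "finance"))
print(sense("bank", "NN", "nature"))
print(sense("fall", "NN", "weather"))
```

Keying on the domain lets the same surface word carry different meanings. Keying on the word class separates "fall" the verb (losing one's balance) from "fall" the noun (the season).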

Example (domain: LOAN)

Class 4 (negation) + Class 1 (verb of interest) + loan (noun) = “don’t want a loan”

The machine will perceive this input as VB_negation + VB_interest + NN_entity. In this case, it is better to break the input down into components than to feed in whole phrases. Language is subjective, so there can be millions of permutations and combinations of phrases, whereas a small set of word classes can cover many of them.
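The sketch below illustrates the component approach in Python. Several details are hypothetical: the word lists for each class, the `matches` function, and the rule that words outside the three classes (such as "I" and "a") are ignored. It reuses the class labels from the example above. A single class pattern matches every combination of the listed words, so seven words already cover twelve phrasings.

```python
from itertools import product

# Hypothetical word lists for the LOAN domain, using the class labels above.
CLASSES = {
    "VB_negation": {"don't", "never"},
    "VB_interest": {"want", "need", "require"},
    "NN_entity": {"loan", "mortgage"},
}
PATTERN = ("VB_negation", "VB_interest", "NN_entity")

def classify(token: str):
    """Return the class label of a token, or None if it belongs to no class."""
    return next((cls for cls, words in CLASSES.items() if token in words), None)

def matches(utterance: str) -> bool:
    """True if the classified tokens, in order, form the negation-interest-entity pattern."""
    labels = [classify(t.strip(".,!?")) for t in utterance.lower().split()]
    # Words outside the three classes (e.g. "I", "a") are ignored.
    return tuple(label for label in labels if label is not None) == PATTERN

# Every combination of the listed words is covered by the one pattern.
phrasings = [f"I {n} {v} a {e}"
             for n, v, e in product(CLASSES["VB_negation"],
                                    CLASSES["VB_interest"],
                                    CLASSES["NN_entity"])]
print(len(phrasings), "phrasings matched by one pattern:", all(matches(p) for p in phrasings))
print(matches("I want a loan"))  # no negation, so the pattern does not match
```

Adding one more word to any class extends coverage multiplicatively, which is the practical argument for breaking input into components rather than listing phrases.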

There are certain cases, though, where phrase training takes precedence over breaking input down into components. The next blog post will explain these cases.

REFERENCES:

Source – mostlikelyloveyou